What are Purpose-Built AI Pipelines

This one is for the nerds

In my previous article There was AI before LLMs... and there will be after, I walked through different AI model types: encoders, traditional NLP, decoders, sequence-to-sequence models, and classic ML.

Let me show you how they solve the problems that plague generic LLM deployments.

Case Study: Communication Pattern Analysis

Let’s take a concrete example that’s directly relevant to my startup AINovva’s work of generating knowledge graphs based on information found across emails, documents, calendar events. A consultant is working across multiple client engagements when they get a Slack message from a key stakeholder: “Can you give me an update on where we are with the project deliverables? I have a stakeholder meeting in an hour.”

The consultant now needs to quickly synthesize scattered information about meetings, Slack threads, and email updates in time to synthesize context and generate an informed summary for the client.

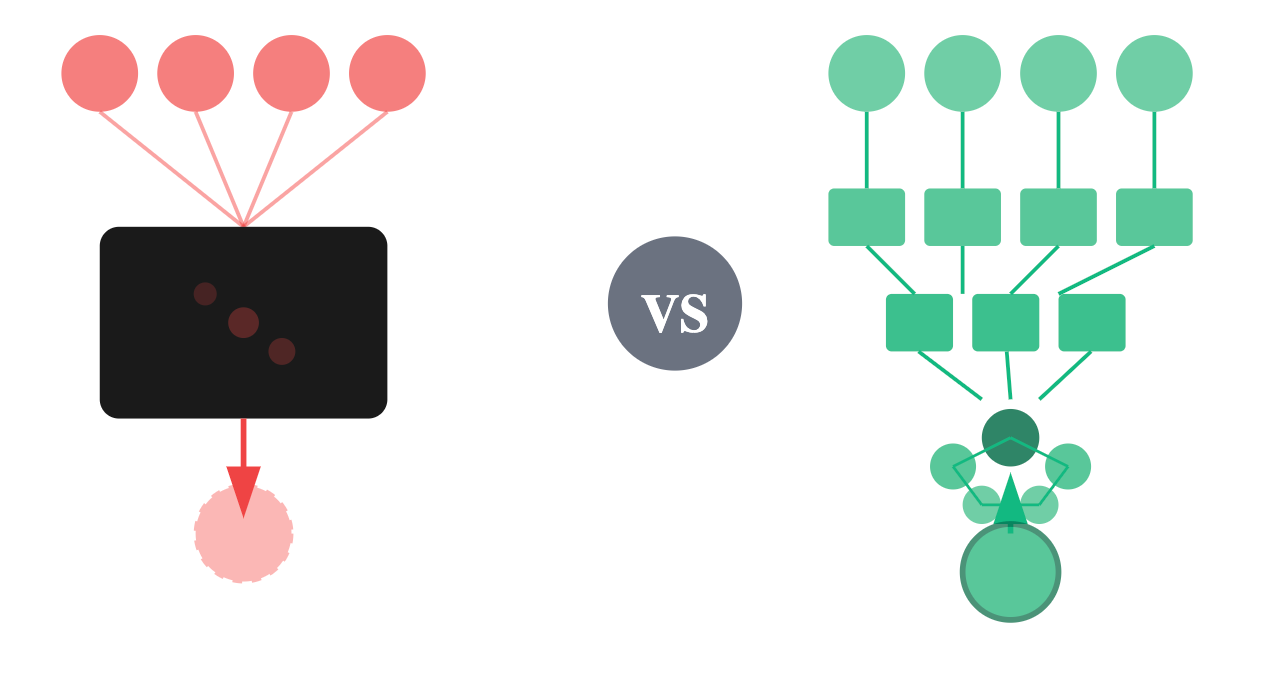

If they choose the LLM approach (loading stuff into ChatGPT or asking Gemini), this is what it might look like:

1. Copies last 10 emails + pastes 5 document excerpts + recent calendar events into the system

2. Prompts GPT-4 or Gemini: “Summarize project progress for Client X”

3. GPT-4/Gemini processes and returns summary (high cost per query due to large unstructured content)

4. Consultant reviews, realizes it missed the scope change discussion from Slack

5. Adds more content, re-queries

6. Reviews summary generated by LLM for accuracy, but doesn't have time to verify all the information within the time before the client's meetingThe problems that make this fail under pressure:

Context window limitations: Can’t send everything relevant, so you’re guessing what matters

Manual assembly required: Consultant is doing the hard work of finding the relevant content

Inconsistent results: Different prompting or content selection gives different answers

Privacy risk: All your client’s confidential project details went to the LLM’s servers

Time pressure failure: The review required to trust the response takes a long time, it’s tempting to forego it

Cost under stress: Adding more content costs more tokens, rerunning costs more tokens

The Purpose-Built Pipeline Approach: